keras IMDB

情緒分析 setiment analysis 又稱為意見探勘 opinionmining,是以自然語言處理、文字分析的方法,找出作者在某些話題的態度、情感、評價或情緒。可讓廠商提早得知客戶對公司或產品的觀感。

IMDb: Internet Movie Database是線上電影資料庫,始於 1990 年,1998 年起,成為 amazon 旗下的網站,收錄了 4百多萬筆作品資料。

IMDb 資料及共有 50000 筆影評文字,分訓練與測試資料各 25000 筆,每一筆資料都被標記為「正面評價」或「負面評價」

keras 處理 IMDb 的步驟

Step 1. 讀取資料集

訓練資料

train_text (文字):0~12499 筆:正面評價文字,12500~24999 筆:負面評價文字

y_train(標籤):0~12499 筆:正面評價都是 1,12500~24999 筆:負面評價,都是 0

測試資料

test_text (文字):0~12499 筆:正面評價文字,12500~24999 筆:負面評價文字

y_test(標籤):0~12499 筆:正面評價都是 1,12500~24999 筆:負面評價,都是 0

Step 2. 建立 token

因為深度學習模型只能接受數字,必須將影評文字,轉換為數字 list。翻譯前,要先製作字典。keras 提供 Tokenizer module,就是類似字典的功能

建立 token 的方式如下:

要指定字典的字數,例如 2000 字的字典

讀取 25000 筆訓練資料,依照每一個英文單字,在所有影評中出現的次數,進行排序,排序前 2000 名的英文字,就列入字典中

因為是依照出現次數排序建立的字典,可說是影評的常用字字典

利用字典進行轉換: ex: 'the' 換為 1, 'is' 換為 6

如果單字沒有在字典中,就不進行轉換。

Step 3. 使用 token 將「影評文字」轉換為「數字list」

Step 4. 截長補短,讓所有「數字list」長度變成 100

因為影評文字的長度不固定,後續要將數字 list 轉換為「向量list」,長度必須固定,方法很簡單,就是截長補短

例如將長度定為 100,如果數字 list 長度為 59,就在前面補上 41 個 0。如果list 長度為 126,就將前面 26 個數字截掉

Step 5. 使用 Embedding 層,將「數字 list」轉換為「向量list」

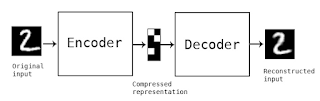

Word Embedding 是一種自然語言處理技術,原理是將文字映射成多維幾何空間的向量。類似語意的文字的向量,在多維幾何空間中的距離也比較近。影評文字轉換為數字 list後,數字沒有語意關聯,為了得到關聯性,要轉換為向量。語意相近的詞語,向量會比較接近。

ex:

pleasure -> 38 -> (1.2, 2.3, 3.2)

dislike -> 21 -> (-1.21, 2.7, 3.2)

like -> 10 -> (1.25, 2.33, 3.4)

hate -> 28 -> (-1.5, 2.63, 3.22)Step 6. 將「向量 list」送入深度學習模型

資料預處理

# get imdb data

import urllib.request

import os

import tarfile

# 下載資料集

import os

if not os.path.exists('data'):

os.makedirs('data')

url="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz"

filepath="data/aclImdb_v1.tar.gz"

if not os.path.isfile(filepath):

result=urllib.request.urlretrieve(url,filepath)

print('downloaded:',result)

if not os.path.exists("data/aclImdb"):

tfile = tarfile.open("data/aclImdb_v1.tar.gz", 'r:gz')

result=tfile.extractall('data/')

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.preprocessing.text import Tokenizer

## rm_tags 可移除文字中的 html tag

import re

def rm_tags(text):

re_tag = re.compile(r'<[^>]+>')

return re_tag.sub('', text)

# filetype 為 train 或 test

import os

def read_files(filetype):

path = "data/aclImdb/"

file_list=[]

# 取得檔案 list

positive_path=path + filetype+"/pos/"

for f in os.listdir(positive_path):

file_list+=[positive_path+f]

negative_path=path + filetype+"/neg/"

for f in os.listdir(negative_path):

file_list+=[negative_path+f]

print('read',filetype, 'files:',len(file_list))

# 產生 labels,前面 12500 筆為 1,後面 12500 筆為 0

all_labels = ([1] * 12500 + [0] * 12500)

# 讀取檔案內容,並去掉 html tag

all_texts = []

for fi in file_list:

with open(fi,encoding='utf8') as file_input:

all_texts += [rm_tags(" ".join(file_input.readlines()))]

return all_labels,all_texts

y_train,train_text=read_files("train")

y_test,test_text=read_files("test")

# #查看正面評價的影評

# print()

# print("train_text[0]=",train_text[0], ", y_train[0]=", y_train[0])

# #查看負面評價的影評

# print("train_text[12500]=", train_text[12500], ", y_train[12500]=", y_train[12500])

###

# 建立 token

# 先讀取所有文章建立字典,限制字典的數量為 nb_words=2000

token = Tokenizer(num_words=2000)

token.fit_on_texts(train_text)

print()

print("token.document_count=", token.document_count)

## token.document_count= 25000

# print("token.word_index=", token.word_index)

# 將每一篇文章的文字轉換一連串的數字

# 只有在字典中的文字會轉換為數字

x_train_seq = token.texts_to_sequences(train_text)

x_test_seq = token.texts_to_sequences(test_text)

print()

print(train_text[0])

print(x_train_seq[0])

# 讓轉換後的數字長度相同

#文章內的文字,轉換為數字後,每一篇的文章地所產生的數字長度都不同,因為後需要進行類神經網路的訓練,所以每一篇文章所產生的數字長度必須相同

#以下列程式碼為例maxlen=100,所以每一篇文章轉換為數字都必須為100

#如果文章轉成數字大於0,pad_sequences處理後,會truncate前面的數字

x_train = sequence.pad_sequences(x_train_seq, maxlen=100)

x_test = sequence.pad_sequences(x_test_seq, maxlen=100)

print()

print('before pad_sequences length=',len(x_train_seq[0]))

print(x_train_seq[0])

print('after pad_sequences length=',len(x_train[0]))

print(x_train[0])

print()

print('before pad_sequences length=',len(x_train_seq[1]))

print(x_train_seq[1])

print('after pad_sequences length=',len(x_train[1]))

print(x_train[1])

####

## 資料預處理

# token = Tokenizer(num_words=2000)

# token.fit_on_texts(train_text)

# x_train_seq = token.texts_to_sequences(train_text)

# x_test_seq = token.texts_to_sequences(test_text)

# x_train = sequence.pad_sequences(x_train_seq, maxlen=100)

# x_test = sequence.pad_sequences(x_test_seq, maxlen=100)

以 MLP 進行情感分析

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_1 (Embedding) (None, 100, 32) 64000

_________________________________________________________________

dropout_1 (Dropout) (None, 100, 32) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 3200) 0

_________________________________________________________________

dense_1 (Dense) (None, 256) 819456

_________________________________________________________________

dropout_2 (Dropout) (None, 256) 0

_________________________________________________________________

dense_2 (Dense) (None, 1) 257

=================================================================

Total params: 883,713

Trainable params: 883,713

Non-trainable params: 0# get imdb data

import urllib.request

import os

import tarfile

# 下載資料集

import os

if not os.path.exists('data'):

os.makedirs('data')

url="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz"

filepath="data/aclImdb_v1.tar.gz"

if not os.path.isfile(filepath):

result=urllib.request.urlretrieve(url,filepath)

print('downloaded:',result)

if not os.path.exists("data/aclImdb"):

tfile = tarfile.open("data/aclImdb_v1.tar.gz", 'r:gz')

result=tfile.extractall('data/')

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.preprocessing.text import Tokenizer

## rm_tags 可移除文字中的 html tag

import re

def rm_tags(text):

re_tag = re.compile(r'<[^>]+>')

return re_tag.sub('', text)

# filetype 為 train 或 test

import os

def read_files(filetype):

path = "data/aclImdb/"

file_list=[]

# 取得檔案 list

positive_path=path + filetype+"/pos/"

for f in os.listdir(positive_path):

file_list+=[positive_path+f]

negative_path=path + filetype+"/neg/"

for f in os.listdir(negative_path):

file_list+=[negative_path+f]

print('read',filetype, 'files:',len(file_list))

# 產生 labels,前面 12500 筆為 1,後面 12500 筆為 0

all_labels = ([1] * 12500 + [0] * 12500)

# 讀取檔案內容,並去掉 html tag

all_texts = []

for fi in file_list:

with open(fi,encoding='utf8') as file_input:

all_texts += [rm_tags(" ".join(file_input.readlines()))]

return all_labels,all_texts

y_train,train_text=read_files("train")

y_test,test_text=read_files("test")

####

## 資料預處理

token = Tokenizer(num_words=2000)

token.fit_on_texts(train_text)

# 轉成數字 list

x_train_seq = token.texts_to_sequences(train_text)

x_test_seq = token.texts_to_sequences(test_text)

# 截長補短

x_train = sequence.pad_sequences(x_train_seq, maxlen=100)

x_test = sequence.pad_sequences(x_test_seq, maxlen=100)

## 建立模型

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation,Flatten

from keras.layers.embeddings import Embedding

model = Sequential()

# 將 Embedding 層加入模型

# output_dim=32 讓 數字 list 轉輸出成 32 維向量

# input_dim=2000 輸入字典共 2000 字

# input_length=100 因為每一筆資料有 100 個數字

model.add(Embedding(output_dim=32,

input_dim=2000,

input_length=100))

# Dropout 可避免 overfitting

model.add(Dropout(0.2))

#### 多層感知模型

# 平坦層

# 因為數字 list 為 100,每一個數字轉成 32 維向量

# 100*32 = 3200 個神經元

model.add(Flatten())

# 隱藏層

# 有 256 個神經元

model.add(Dense(units=256,

activation='relu' ))

model.add(Dropout(0.2))

## 輸出層 1 個神經元

model.add(Dense(units=1,

activation='sigmoid' ))

print(model.summary())

### 訓練模型

model.compile(loss='binary_crossentropy',

optimizer='adam',

metrics=['accuracy'])

# validation_split=0.2 80% 是訓練資料, 20% 是驗證資料

train_history =model.fit(x_train, y_train,batch_size=100,

epochs=10,verbose=2,

validation_split=0.2)

####################

## 這兩行可解決 ModuleNotFoundError: No module named '_tkinter'

## ref: https://stackoverflow.com/questions/36327134/matplotlib-error-no-module-named-tkinter

import matplotlib

matplotlib.use('agg')

####

import matplotlib.pyplot as plt

def show_train_history(train_acc,test_acc, filename):

plt.clf()

plt.gcf()

plt.plot(train_history.history[train_acc])

plt.plot(train_history.history[test_acc])

plt.title('Train History')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.savefig(filename)

show_train_history('acc','val_acc', 'accuracy.png')

show_train_history('loss','val_loss', 'loss.png')

######

# 評估模型準確率

print()

scores = model.evaluate(x_test, y_test, verbose=1)

print("scores[1]=", scores[1])

## scores[1]= 0.81508

####

# 預測機率

probility=model.predict(x_test)

for p in probility[12500:12510]:

print(p)

## 預測結果

print()

predict=model.predict_classes(x_test)

predict_classes=predict.reshape(-1)

print( predict_classes[:10] )

## 查看預測結果

SentimentDict={1:'正面的',0:'負面的'}

def display_test_Sentiment(i):

print()

print(test_text[i])

print('標籤label:',SentimentDict[y_test[i]],

'預測結果:',SentimentDict[predict_classes[i]])

display_test_Sentiment(2)

display_test_Sentiment(12502)

#預測新的影評

def predict_review(input_text):

input_seq = token.texts_to_sequences([input_text])

pad_input_seq = sequence.pad_sequences(input_seq , maxlen=100)

predict_result=model.predict_classes(pad_input_seq)

print()

print(SentimentDict[predict_result[0][0]])

predict_review('''

Oh dear, oh dear, oh dear: where should I start folks. I had low expectations already because I hated each and every single trailer so far, but boy did Disney make a blunder here. I'm sure the film will still make a billion dollars - hey: if Transformers 11 can do it, why not Belle? - but this film kills every subtle beautiful little thing that had made the original special, and it does so already in the very early stages. It's like the dinosaur stampede scene in Jackson's King Kong: only with even worse CGI (and, well, kitchen devices instead of dinos).

The worst sin, though, is that everything (and I mean really EVERYTHING) looks fake. What's the point of making a live-action version of a beloved cartoon if you make every prop look like a prop? I know it's a fairy tale for kids, but even Belle's village looks like it had only recently been put there by a subpar production designer trying to copy the images from the cartoon. There is not a hint of authenticity here. Unlike in Jungle Book, where we got great looking CGI, this really is the by-the-numbers version and corporate filmmaking at its worst. Of course it's not really a "bad" film; those 200 million blockbusters rarely are (this isn't 'The Room' after all), but it's so infuriatingly generic and dull - and it didn't have to be. In the hands of a great director the potential for this film would have been huge.

Oh and one more thing: bad CGI wolves (who actually look even worse than the ones in Twilight) is one thing, and the kids probably won't care. But making one of the two lead characters - Beast - look equally bad is simply unforgivably stupid. No wonder Emma Watson seems to phone it in: she apparently had to act against an guy with a green-screen in the place where his face should have been.

''')

predict_review('''

It's hard to believe that the same talented director who made the influential cult action classic The Road Warrior had anything to do with this disaster.

Road Warrior was raw, gritty, violent and uncompromising, and this movie is the exact opposite. It's like Road Warrior for kids who need constant action in their movies.

This is the movie. The good guys get into a fight with the bad guys, outrun them, they break down in their vehicle and fix it. Rinse and repeat. The second half of the movie is the first half again just done faster.

The Road Warrior may have been a simple premise but it made you feel something, even with it's opening narration before any action was even shown. And the supporting characters were given just enough time for each of them to be likable or relatable.

In this movie there is absolutely nothing and no one to care about. We're supposed to care about the characters because... well we should. George Miller just wants us to, and in one of the most cringe worthy moments Charlize Theron's character breaks down while dramatic music plays to try desperately to make us care.

Tom Hardy is pathetic as Max. One of the dullest leading men I've seen in a long time. There's not one single moment throughout the entire movie where he comes anywhere near reaching the same level of charisma Mel Gibson did in the role. Gibson made more of an impression just eating a tin of dog food. I'm still confused as to what accent Hardy was even trying to do.

I was amazed that Max has now become a cartoon character as well. Gibson's Max was a semi-realistic tough guy who hurt, bled, and nearly died several times. Now he survives car crashes and tornadoes with ease?

In the previous movies, fuel and guns and bullets were rare. Not anymore. It doesn't even seem Post-Apocalyptic. There's no sense of desperation anymore and everything is too glossy looking. And the main villain's super model looking wives with their perfect skin are about as convincing as apocalyptic survivors as Hardy's Australian accent is. They're so boring and one-dimensional, George Miller could have combined them all into one character and you wouldn't miss anyone.

Some of the green screen is very obvious and fake looking, and the CGI sandstorm is laughably bad. It wouldn't look out of place in a Pixar movie.

There's no tension, no real struggle, or any real dirt and grit that Road Warrior had. Everything George Miller got right with that masterpiece he gets completely wrong here.

''')

# serialize model to JSON

if not os.path.exists('SaveModel'):

os.makedirs('SaveModel')

model_json = model.to_json()

with open("SaveModel/Imdb_RNN_model.json", "w") as json_file:

json_file.write(model_json)

model.save_weights("SaveModel/Imdb_RNN_model.h5")

print("Saved model to disk")用較大的字典數量,改善準確率 由 scores[1]= 0.81508 改善為 scores[1]= 0.85388

字典數量 3800

數字 list 長度為 380

遞迴神經網路 RNN

自然語言處理的問題中,資料具有順序性,因為 MLP, CNN 都只能依照目前的狀態進行辨識,如果要處理有時間序列的問題,必須改用 RNN, LSTM 模型

RNN 的神經元具有記憶的功能

在 t 時間點

\(X_t\) 是 t 時間點神經網路的輸入

\(O_t\) 是 t 時間點神經網路的輸出

(U, V, W) 是神經網路的參數, W 參數是 t-1 時間點的輸出,作為 t 時間點的輸入

\(S_t\) 是隱藏狀態,代表神經網路的記憶,經過目前時間點的輸入 \(X_t\) 再加上上一個時間點的狀態 \(S_{t-1}\) ,再加上 U, W 參數的評估結果

\(S_t = f([U]X_t+[W]S_{t-1})\) 其中 f 為非線性函數,例如 ReLU

RNN 流程

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_1 (Embedding) (None, 380, 32) 121600

_________________________________________________________________

dropout_1 (Dropout) (None, 380, 32) 0

_________________________________________________________________

simple_rnn_1 (SimpleRNN) (None, 16) 784

_________________________________________________________________

dense_1 (Dense) (None, 256) 4352

_________________________________________________________________

dropout_2 (Dropout) (None, 256) 0

_________________________________________________________________

dense_2 (Dense) (None, 1) 257

=================================================================

Total params: 126,993

Trainable params: 126,993

Non-trainable params: 0# get imdb data

import urllib.request

import os

import tarfile

# 下載資料集

import os

if not os.path.exists('data'):

os.makedirs('data')

url="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz"

filepath="data/aclImdb_v1.tar.gz"

if not os.path.isfile(filepath):

result=urllib.request.urlretrieve(url,filepath)

print('downloaded:',result)

if not os.path.exists("data/aclImdb"):

tfile = tarfile.open("data/aclImdb_v1.tar.gz", 'r:gz')

result=tfile.extractall('data/')

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.preprocessing.text import Tokenizer

## rm_tags 可移除文字中的 html tag

import re

def rm_tags(text):

re_tag = re.compile(r'<[^>]+>')

return re_tag.sub('', text)

# filetype 為 train 或 test

import os

def read_files(filetype):

path = "data/aclImdb/"

file_list=[]

# 取得檔案 list

positive_path=path + filetype+"/pos/"

for f in os.listdir(positive_path):

file_list+=[positive_path+f]

negative_path=path + filetype+"/neg/"

for f in os.listdir(negative_path):

file_list+=[negative_path+f]

print('read',filetype, 'files:',len(file_list))

# 產生 labels,前面 12500 筆為 1,後面 12500 筆為 0

all_labels = ([1] * 12500 + [0] * 12500)

# 讀取檔案內容,並去掉 html tag

all_texts = []

for fi in file_list:

with open(fi,encoding='utf8') as file_input:

all_texts += [rm_tags(" ".join(file_input.readlines()))]

return all_labels,all_texts

y_train,train_text=read_files("train")

y_test,test_text=read_files("test")

####

## 資料預處理

token = Tokenizer(num_words=3800)

token.fit_on_texts(train_text)

# 轉成數字 list

x_train_seq = token.texts_to_sequences(train_text)

x_test_seq = token.texts_to_sequences(test_text)

# 截長補短

x_train = sequence.pad_sequences(x_train_seq, maxlen=380)

x_test = sequence.pad_sequences(x_test_seq, maxlen=380)

## 建立模型

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation

from keras.layers.embeddings import Embedding

from keras.layers.recurrent import SimpleRNN

model = Sequential()

# 將 Embedding 層加入模型

# output_dim=32 讓 數字 list 轉輸出成 32 維向量

# input_dim=3800 輸入字典共 3800 字

# input_length=100 因為每一筆資料有 100 個數字

model.add(Embedding(output_dim=32,

input_dim=3800,

input_length=380))

# Dropout 可避免 overfitting

model.add(Dropout(0.2))

#### RNN

model.add(SimpleRNN(units=32))

model.add(Dense(units=256,activation='relu' ))

model.add(Dropout(0.2))

model.add(Dense(units=1,activation='sigmoid' ))

print(model.summary())

### 訓練模型

model.compile(loss='binary_crossentropy',

optimizer='adam',

metrics=['accuracy'])

# validation_split=0.2 80% 是訓練資料, 20% 是驗證資料

train_history =model.fit(x_train, y_train,batch_size=100,

epochs=10,verbose=2,

validation_split=0.2)

####################

## 這兩行可解決 ModuleNotFoundError: No module named '_tkinter'

## ref: https://stackoverflow.com/questions/36327134/matplotlib-error-no-module-named-tkinter

import matplotlib

matplotlib.use('agg')

####

import matplotlib.pyplot as plt

def show_train_history(train_acc,test_acc, filename):

plt.clf()

plt.gcf()

plt.plot(train_history.history[train_acc])

plt.plot(train_history.history[test_acc])

plt.title('Train History')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.savefig(filename)

show_train_history('acc','val_acc', 'accuracy.png')

show_train_history('loss','val_loss', 'loss.png')

######

# 評估模型準確率

print()

scores = model.evaluate(x_test, y_test, verbose=1)

print("scores[1]=", scores[1])

## scores[1]= 0.83972

####

# 預測機率

probility=model.predict(x_test)

for p in probility[12500:12510]:

print(p)

## 預測結果

print()

predict=model.predict_classes(x_test)

predict_classes=predict.reshape(-1)

print( predict_classes[:10] )

## 查看預測結果

SentimentDict={1:'正面的',0:'負面的'}

def display_test_Sentiment(i):

print()

print(test_text[i])

print('標籤label:',SentimentDict[y_test[i]],

'預測結果:',SentimentDict[predict_classes[i]])

display_test_Sentiment(2)

display_test_Sentiment(12502)

#預測新的影評

def predict_review(input_text):

input_seq = token.texts_to_sequences([input_text])

pad_input_seq = sequence.pad_sequences(input_seq , maxlen=380)

predict_result=model.predict_classes(pad_input_seq)

print()

print(SentimentDict[predict_result[0][0]])

predict_review('''

Oh dear, oh dear, oh dear: where should I start folks. I had low expectations already because I hated each and every single trailer so far, but boy did Disney make a blunder here. I'm sure the film will still make a billion dollars - hey: if Transformers 11 can do it, why not Belle? - but this film kills every subtle beautiful little thing that had made the original special, and it does so already in the very early stages. It's like the dinosaur stampede scene in Jackson's King Kong: only with even worse CGI (and, well, kitchen devices instead of dinos).

The worst sin, though, is that everything (and I mean really EVERYTHING) looks fake. What's the point of making a live-action version of a beloved cartoon if you make every prop look like a prop? I know it's a fairy tale for kids, but even Belle's village looks like it had only recently been put there by a subpar production designer trying to copy the images from the cartoon. There is not a hint of authenticity here. Unlike in Jungle Book, where we got great looking CGI, this really is the by-the-numbers version and corporate filmmaking at its worst. Of course it's not really a "bad" film; those 200 million blockbusters rarely are (this isn't 'The Room' after all), but it's so infuriatingly generic and dull - and it didn't have to be. In the hands of a great director the potential for this film would have been huge.

Oh and one more thing: bad CGI wolves (who actually look even worse than the ones in Twilight) is one thing, and the kids probably won't care. But making one of the two lead characters - Beast - look equally bad is simply unforgivably stupid. No wonder Emma Watson seems to phone it in: she apparently had to act against an guy with a green-screen in the place where his face should have been.

''')

predict_review('''

It's hard to believe that the same talented director who made the influential cult action classic The Road Warrior had anything to do with this disaster.

Road Warrior was raw, gritty, violent and uncompromising, and this movie is the exact opposite. It's like Road Warrior for kids who need constant action in their movies.

This is the movie. The good guys get into a fight with the bad guys, outrun them, they break down in their vehicle and fix it. Rinse and repeat. The second half of the movie is the first half again just done faster.

The Road Warrior may have been a simple premise but it made you feel something, even with it's opening narration before any action was even shown. And the supporting characters were given just enough time for each of them to be likable or relatable.

In this movie there is absolutely nothing and no one to care about. We're supposed to care about the characters because... well we should. George Miller just wants us to, and in one of the most cringe worthy moments Charlize Theron's character breaks down while dramatic music plays to try desperately to make us care.

Tom Hardy is pathetic as Max. One of the dullest leading men I've seen in a long time. There's not one single moment throughout the entire movie where he comes anywhere near reaching the same level of charisma Mel Gibson did in the role. Gibson made more of an impression just eating a tin of dog food. I'm still confused as to what accent Hardy was even trying to do.

I was amazed that Max has now become a cartoon character as well. Gibson's Max was a semi-realistic tough guy who hurt, bled, and nearly died several times. Now he survives car crashes and tornadoes with ease?

In the previous movies, fuel and guns and bullets were rare. Not anymore. It doesn't even seem Post-Apocalyptic. There's no sense of desperation anymore and everything is too glossy looking. And the main villain's super model looking wives with their perfect skin are about as convincing as apocalyptic survivors as Hardy's Australian accent is. They're so boring and one-dimensional, George Miller could have combined them all into one character and you wouldn't miss anyone.

Some of the green screen is very obvious and fake looking, and the CGI sandstorm is laughably bad. It wouldn't look out of place in a Pixar movie.

There's no tension, no real struggle, or any real dirt and grit that Road Warrior had. Everything George Miller got right with that masterpiece he gets completely wrong here.

''')

# serialize model to JSON

if not os.path.exists('SaveModel'):

os.makedirs('SaveModel')

model_json = model.to_json()

with open("SaveModel/Imdb_RNN_model.json", "w") as json_file:

json_file.write(model_json)

model.save_weights("SaveModel/Imdb_RNN_model.h5")

print("Saved model to disk")

LSTM 模型

RNN 在訓練時,會發生 long-term dependencies 的問題,這是因為 RNN 會遇到梯度消失或爆炸 vanishing/exploding 的問題,訓練時在計算與反向傳播,梯度傾向於在每一個時刻遞增或遞減,經過一段時間後,會發散到無限大或收斂到 0

long-term dependencies 的問題,就是在每一個時間的間隔不斷增加時, RNN 會失去學習到連接遠的訊息的能力。如下圖: 隨著時間點 t 不段遞增,到了後期 t,隱藏狀態 \(S_t\) 已經失去學習 \(X_0\) 的能力。導致神經網路不能知道,我是在台北市市政府上班。

RNN 只能取得短期記憶,不記得長期記憶,因此有 LSTM 模型解決此問題

LSTM 中,一個神經元相當於一個記憶細胞 cell

- \(X_t\) 輸入向量

- \(Y_t\) 輸出向量

- \(C_t\): cell ,是 LSTM 的記憶細胞狀態 cell state

- LSTM 利用 gate 機制,控制記憶細胞狀態,刪減或增加裡面的訊息

- \(I_t\) Input Gate 用來決定哪些訊息要增加到 cell

- \(F_t\) Forget Gate 決定哪些訊息要被刪減

- \(O_t\) Output Gate 決定要從 cell 輸出哪些訊息

有了 Gate, LSTM 就能記住長期記憶

程式只需要修改 model

model = Sequential()

# 將 Embedding 層加入模型

# output_dim=32 讓 數字 list 轉輸出成 32 維向量

# input_dim=3800 輸入字典共 3800 字

# input_length=100 因為每一筆資料有 100 個數字

model.add(Embedding(output_dim=32,

input_dim=3800,

input_length=380))

# Dropout 可避免 overfitting

model.add(Dropout(0.2))

#### LSTM

model.add( LSTM(32) )

model.add(Dense(units=256,activation='relu' ))

model.add(Dropout(0.2))

model.add(Dense(units=1,activation='sigmoid' ))評估模型準確率:

MLP 100

scores[1]= 0.81508MLP 380

scores[1]= 0.84252RNN

scores[1]= 0.83972LSTM

scores[1]= 0.85792